Rancher Manager includes a ‘Continuous Delivery’ option, which is the deployment method preferred by SUSE/Rancher. Although many companies use Argo CD, Fleet is already built in and easy to use. Rancher itself uses Fleet to deploy the Rancher agent to the downstream clusters, so it is a mandatory tool in Rancher-managed clusters. In this blog, I will explain how to set up a GitRepo and how to monitor it, so you can easily manage your fleet. A GitRepo is required because Fleet watches Git repositories and detects changes through commits.

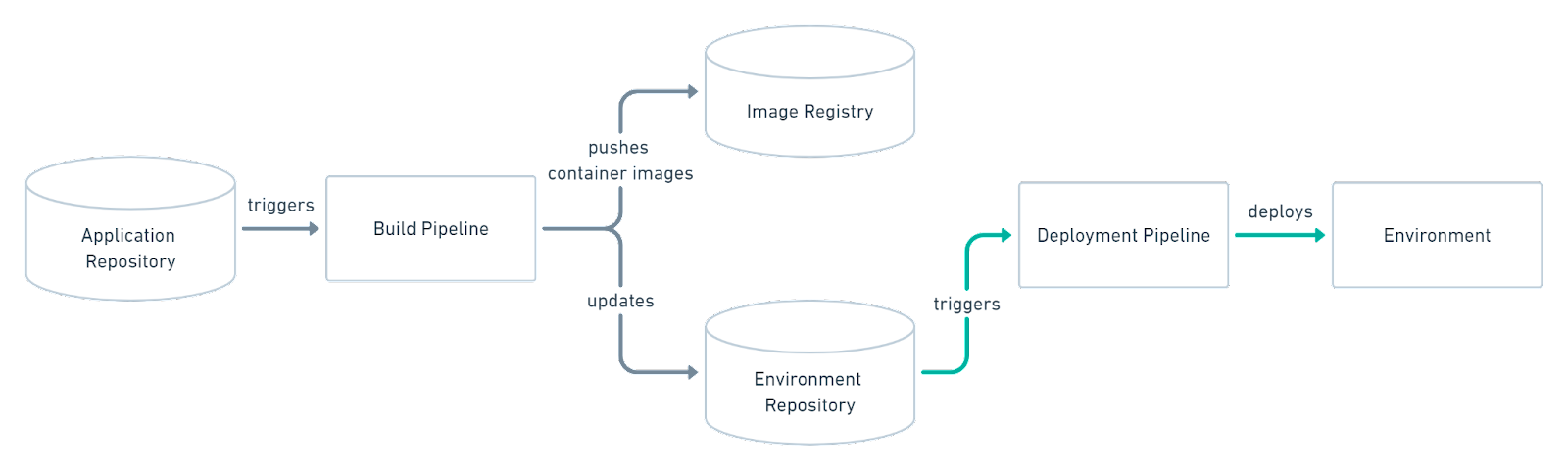

GitOps is the process that triggers a deployment from a Git repository to a Kubernetes cluster. The trigger is a commit to a branch in the Git repository. With the push method, the commit activates a pipeline; in GitLab, for example, the .gitlab-ci.yml pipeline can run when a merge request is approved.

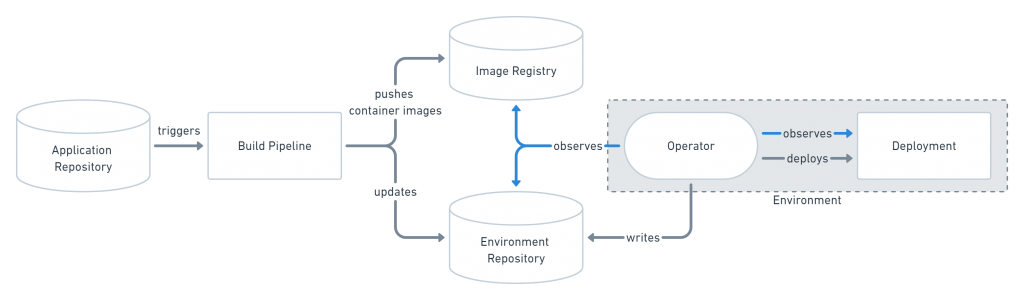

With the pull method, an operator continuously watches the repository and, when a commit is made, pulls the repository files or image. In Rancher, it is Fleet that implements the pull method.
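To make the pull method concrete, here is a minimal shell sketch of such a polling loop. It only illustrates the idea and is not how Fleet is implemented; the function names and the POLL_SECONDS variable are made up for this example.

```shell
#!/bin/sh
# Conceptual sketch of a pull-based operator. NOT Fleet's actual implementation.

# remote_head prints the commit hash at the tip of a branch.
# $1 = repository URL, $2 = branch name
remote_head() {
  git ls-remote "$1" "refs/heads/$2" | cut -f1
}

# needs_sync succeeds when a remote commit exists and differs from the
# last commit that was deployed.
# $1 = last deployed commit, $2 = current remote commit
needs_sync() {
  [ -n "$2" ] && [ "$1" != "$2" ]
}

# watch_repo polls the repository forever and reports every new commit.
# POLL_SECONDS is a hypothetical setting for this sketch.
# $1 = repository URL, $2 = branch name
watch_repo() {
  last_seen=""
  while true; do
    current=$(remote_head "$1" "$2")
    if needs_sync "$last_seen" "$current"; then
      echo "new commit $current on $2: pull and apply"
      last_seen=$current
    fi
    sleep "${POLL_SECONDS:-15}"
  done
}

# Usage (runs until interrupted):
#   watch_repo https://github.com/NicoOosterwijk/fleet.git main
```

The important difference from the push method is that nothing outside the cluster needs credentials for the cluster: the operator only needs read access to the repository.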

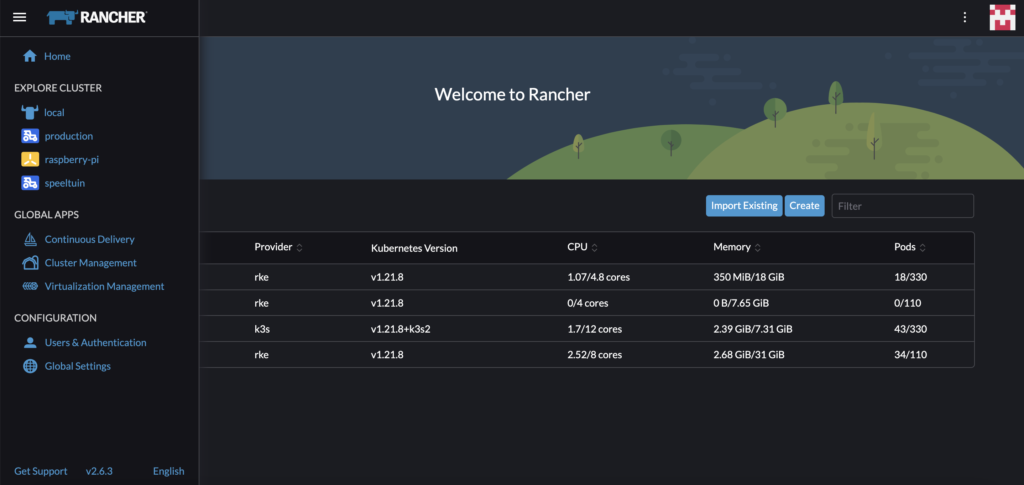

In Rancher Manager, the GitOps features provided by Fleet are found under Continuous Delivery:

Workspaces

GitRepos are added through the Fleet manager and are namespaced by so-called workspaces. Additional workspaces can be created to isolate resources for specific clusters, e.g. a dedicated service account for deployments, secrets or config maps. Users can move their clusters across workspaces, but SUSE recommends keeping the Rancher local cluster in ‘fleet-local’.

In a default installation there are two pre-defined workspaces:

- fleet-local

- fleet-default

fleet-local is used by the local Rancher cluster or when using a single cluster.

fleet-default is the workspace for the downstream clusters.
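Workspaces can also be handled from the command line. The sketch below assumes that Rancher exposes a workspace as a FleetWorkspace resource in the management.cattle.io/v3 API group and that the kubectl context points to the local (Rancher) cluster; the workspace name fleet-staging is hypothetical.

```shell
#!/bin/sh
# workspace_manifest prints a manifest for an additional workspace.
# Assumption: the FleetWorkspace kind in management.cattle.io/v3.
# $1 = workspace name
workspace_manifest() {
  cat <<EOF
apiVersion: management.cattle.io/v3
kind: FleetWorkspace
metadata:
  name: $1
EOF
}

# list_gitrepos lists the GitRepos that live in one workspace.
# $1 = workspace name
list_gitrepos() {
  kubectl get gitrepos.fleet.cattle.io -n "$1"
}

# Usage:
#   workspace_manifest fleet-staging | kubectl apply -f -
#   list_gitrepos fleet-default
```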

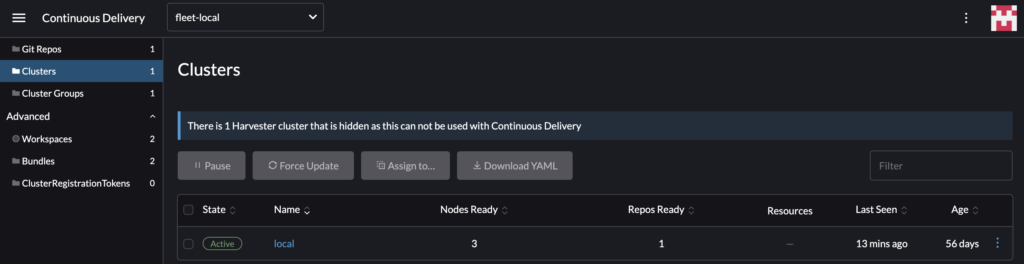

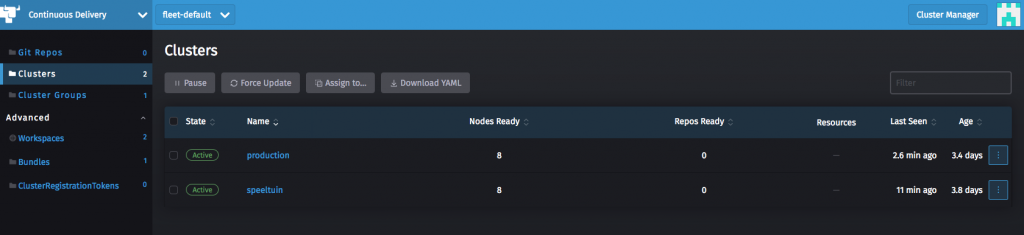

Clusters

Clusters are automatically assigned to the appropriate workspace. It is also possible to create new workspaces and assign clusters to a new workspace.

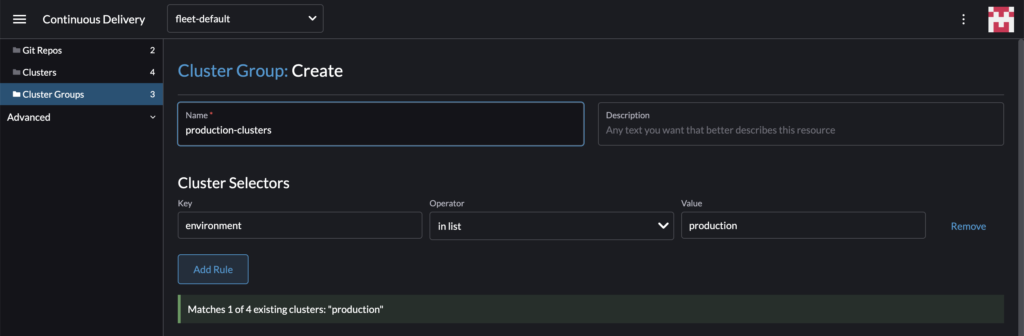

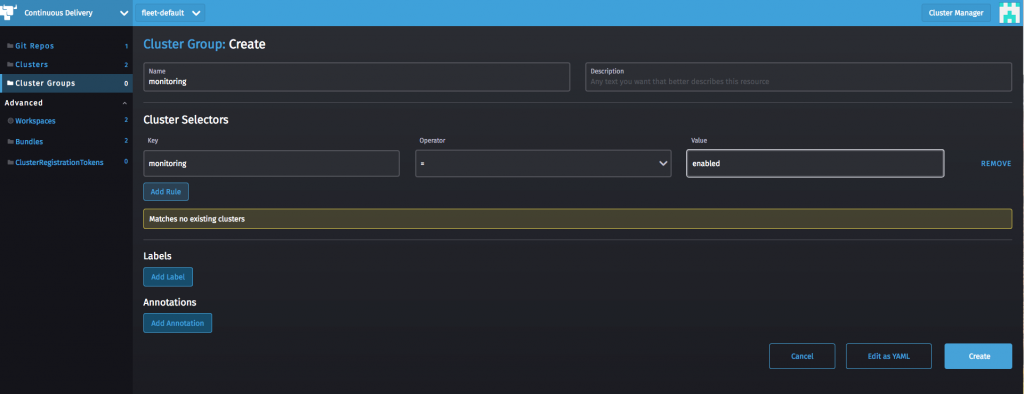

Cluster Groups

Cluster groups can be defined to deploy applications on a group of clusters. The clusters in such a group are selected by a cluster label, e.g. ‘environment=production’.
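The same kind of group can be described as a ClusterGroup resource. The following is a sketch based on my understanding of the Fleet API; the group name ‘production’ and the use of the fleet-default workspace are assumptions for illustration.

```shell
#!/bin/sh
# cluster_group_manifest prints a ClusterGroup that selects clusters by label.
# $1 = group name, $2 = workspace, $3 = label key, $4 = label value
cluster_group_manifest() {
  cat <<EOF
kind: ClusterGroup
apiVersion: fleet.cattle.io/v1alpha1
metadata:
  name: $1
  namespace: $2
spec:
  selector:
    matchLabels:
      $3: "$4"
EOF
}

# Usage (kubectl context set to the local Rancher cluster):
#   cluster_group_manifest production fleet-default environment production \
#     | kubectl apply -f -
```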

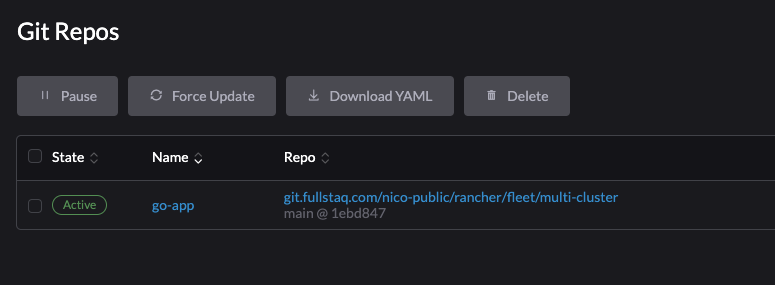

Git Repos

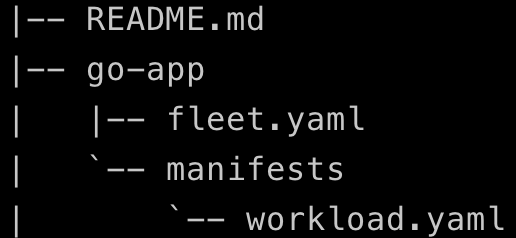

In this menu option, you’ll find the Git repositories of the deployments. As a very simple example, I will show a Go application from my Git repository that will be deployed on a cluster called ‘production’. The repository consists of a fleet.yaml file and a manifests directory.

In this example, fleet.yaml only tells Fleet which namespace to deploy the application to.

The fleet.yaml looks like this:

    namespace: go-app

The manifests directory contains the actual workload.yaml, which defines the Deployment, the Service and an Ingress.
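As a rough sketch, the layout described above can be scaffolded as follows. I am assuming that fleet.yaml sits next to the manifests directory; the workload.yaml written here is only a placeholder, not the real workload.

```shell
#!/bin/sh
# scaffold_go_app_repo creates the minimal layout described above.
# $1 = target directory
scaffold_go_app_repo() {
  mkdir -p "$1/manifests"
  # fleet.yaml only sets the target namespace.
  printf 'namespace: go-app\n' > "$1/fleet.yaml"
  # Placeholder: the real file holds the Deployment, Service and Ingress.
  printf '# Deployment, Service and Ingress go here\n' > "$1/manifests/workload.yaml"
}

# Usage:
#   scaffold_go_app_repo ./go-app
#   find ./go-app -type f
```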

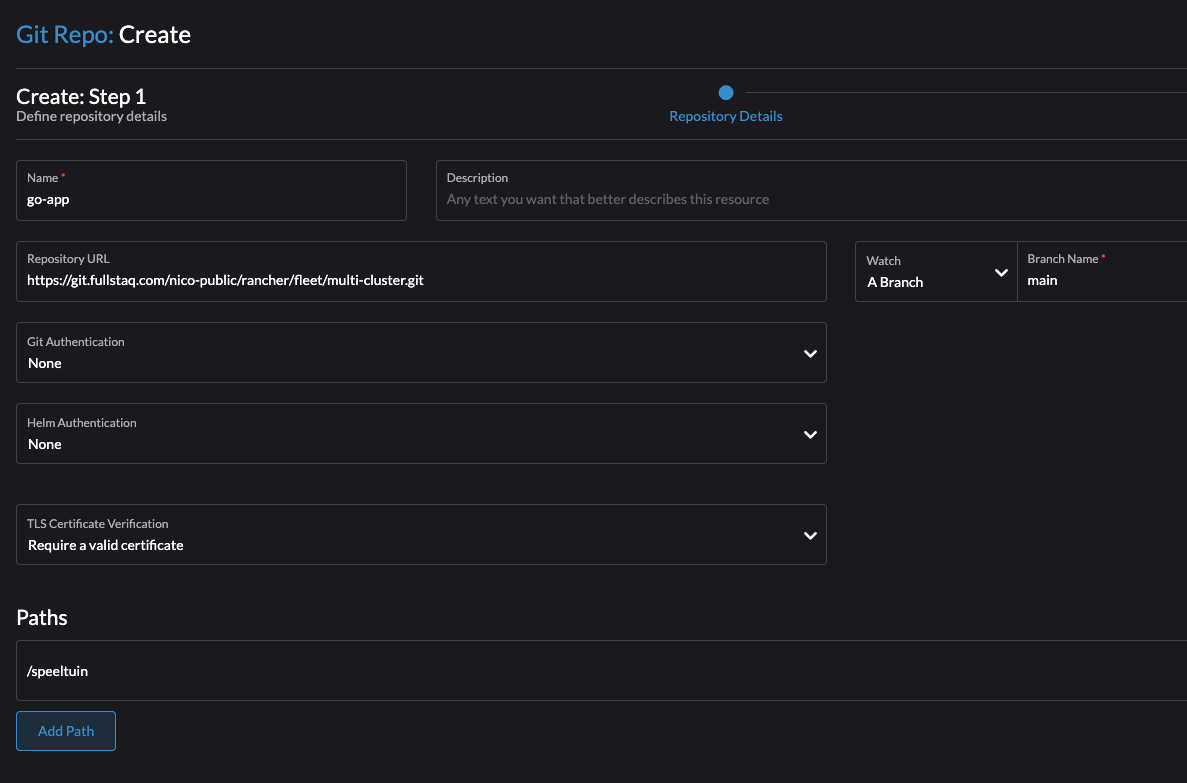

Let’s create our first GitRepo

Step 1: Use the create option under Git Repos in Rancher to create the ‘go-app’ GitRepo. The ‘Repository URL’ is ‘https://git.fullstaq.com/nico-public/rancher/fleet/multi-cluster.git’ and the ‘Branch’ is ‘main’. If you use directories in your Git repository to separate environments, enter the directory for the environment you want to deploy under ‘Add Path’.

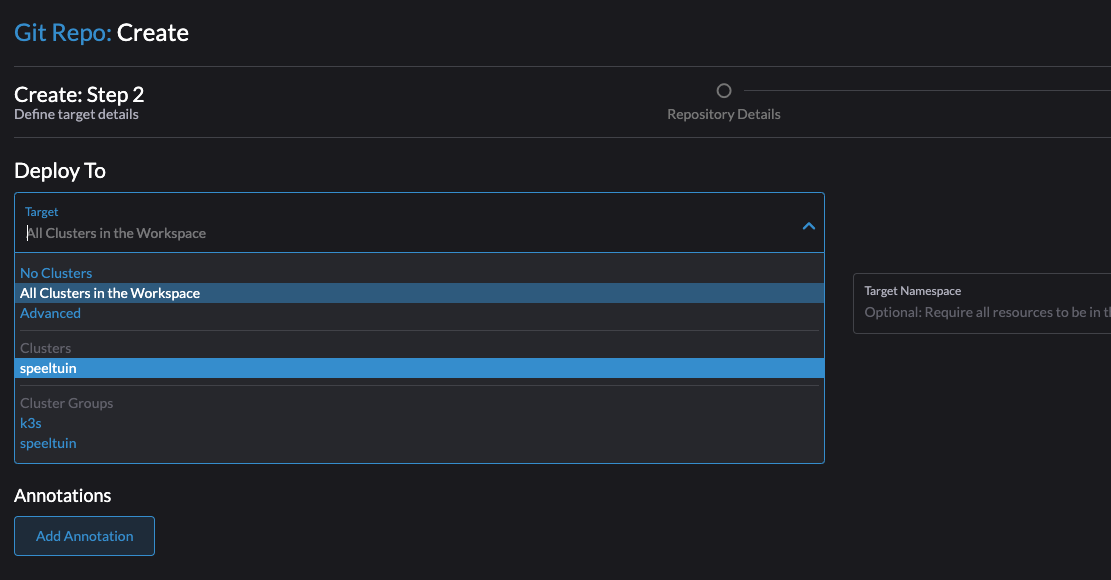

Step 2: In ‘Deploy To’ select the cluster from the pull-down.

After clicking ‘Create’, Fleet reads the repository and deploys what is defined in the manifests. After a few seconds, a so-called ‘Bundle’ appears for this application and the GitRepo changes to Active. A Bundle is a collection of resources that gets deployed to a cluster.
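The two steps in the UI roughly correspond to a GitRepo resource like the one generated below. This is a sketch, not an export from my cluster: it assumes the GitRepo lives in the fleet-default workspace and that the Fleet cluster resource is really named ‘production’ (in Rancher the resource name can differ from the display name).

```shell
#!/bin/sh
# gitrepo_manifest prints a GitRepo that targets a single cluster by name.
# $1 = GitRepo name, $2 = workspace, $3 = repository URL,
# $4 = branch, $5 = name of the Fleet cluster resource
gitrepo_manifest() {
  cat <<EOF
kind: GitRepo
apiVersion: fleet.cattle.io/v1alpha1
metadata:
  name: $1
  namespace: $2
spec:
  repo: $3
  branch: $4
  targets:
  - clusterName: $5
EOF
}

# Usage (kubectl context set to the local Rancher cluster):
#   gitrepo_manifest go-app fleet-default \
#     https://git.fullstaq.com/nico-public/rancher/fleet/multi-cluster.git \
#     main production | kubectl apply -f -
#   kubectl get bundles.fleet.cattle.io -n fleet-default
```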

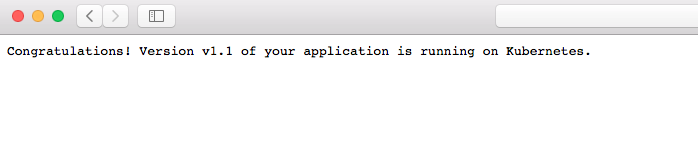

Checking the ingress URL in a browser shows that the application is working:

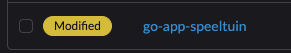

When some of the resources are modified on the cluster without updating the git-repo, the Fleet bundle indicates that it is modified:

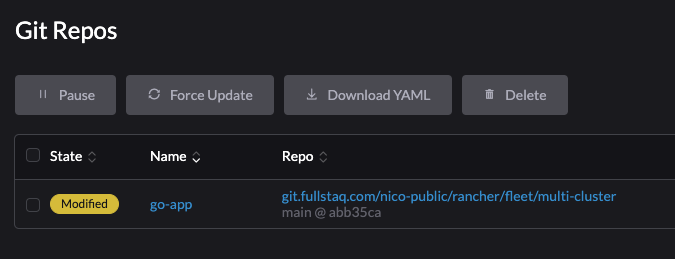

as will the state of the GitRepo:

Two things can be done to solve this issue:

Option 1: Undo the change on the Kubernetes cluster

Option 2: Update the Git repository to match the state of the resources in Kubernetes.

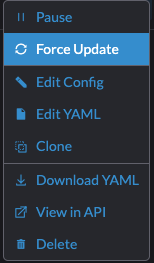

The first option is to force an update of the Kubernetes resources so that they match the GitRepo again.

The other option is to create a feature branch, commit the change and merge it into the main branch; Fleet will then pick it up.

After the difference has been resolved, either on the cluster or through Git, the GitRepo goes back to the ‘Active’ state.

An automatic forced update (like the AutoSync feature of Argo CD) is not available at this time. However, a ‘forceSync’ can be triggered by patching the GitRepo with a kubectl command, and this command could then be scheduled. With the kubectl context set to the local (Rancher) cluster, the command looks like this:

    kubectl patch gitrepos.fleet.cattle.io -n [workspace name] [GitRepo name] -p '{"spec":{"forceSyncGeneration":'$(($(kubectl get gitrepos.fleet.cattle.io -n [workspace name] [GitRepo name] -o jsonpath='{.spec.forceSyncGeneration}')+1))'}}' --type=merge

An example for the go-app in fleet-default:

    kubectl patch gitrepos.fleet.cattle.io -n fleet-default go-app -p '{"spec":{"forceSyncGeneration":'$(($(kubectl get gitrepos.fleet.cattle.io -n fleet-default go-app -o jsonpath='{.spec.forceSyncGeneration}')+1))'}}' --type=merge
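For scheduling, it can help to wrap the command in a small function. The sketch below does the same read-increment-patch as the one-liner, but also handles a GitRepo where forceSyncGeneration has never been set. The function name, the script path and the cron interval are my own assumptions.

```shell
#!/bin/sh
# force_sync bumps spec.forceSyncGeneration on a GitRepo by one.
# Runs in a subshell so its variables do not leak into the caller.
# $1 = workspace name, $2 = GitRepo name
force_sync() (
  current=$(kubectl get gitrepos.fleet.cattle.io -n "$1" "$2" \
    -o jsonpath='{.spec.forceSyncGeneration}')
  # An unset field yields an empty string; treat that as 0.
  next=$(( ${current:-0} + 1 ))
  kubectl patch gitrepos.fleet.cattle.io -n "$1" "$2" --type=merge \
    -p "{\"spec\":{\"forceSyncGeneration\":$next}}"
)

# Usage (kubectl context set to the local Rancher cluster):
#   force_sync fleet-default go-app
#
# Hypothetical crontab entry, assuming this file is saved as
# /usr/local/bin/fleet-force-sync.sh and ends with the call above:
#   */10 * * * * /usr/local/bin/fleet-force-sync.sh
```

Keep in mind that a scheduled forced sync overwrites manual changes on the cluster, so it only makes sense when Git is meant to be the single source of truth.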

Using a Cluster Group

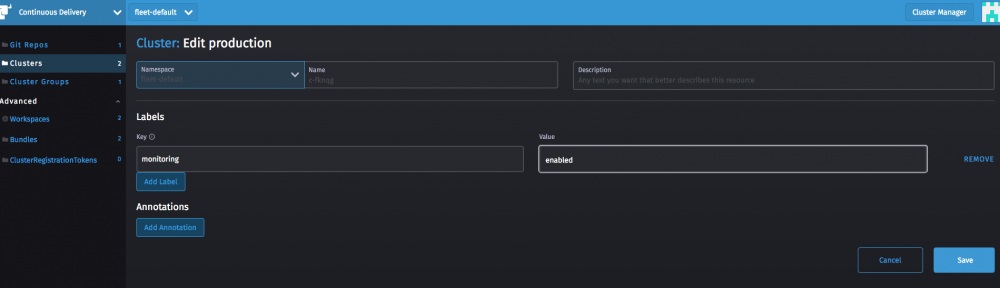

By using a Cluster Group, we can apply deployments to multiple clusters. As an example, we will install Rancher Monitoring on clusters with the label ‘monitoring=enabled’.

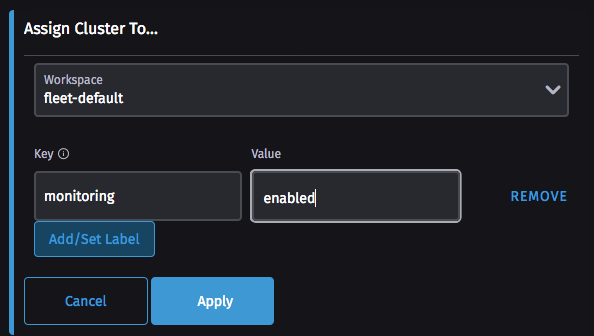

As you can see, there is no cluster with that label yet, but we can add it in one of two ways:

- Option 1: Use the ‘Assign to’ option for a cluster

- Option 2: Create a label via ‘Edit as Form’ on a cluster

This also means that whenever a new cluster is created with the label ‘monitoring=enabled’, Fleet will deploy the monitoring application on that new cluster.

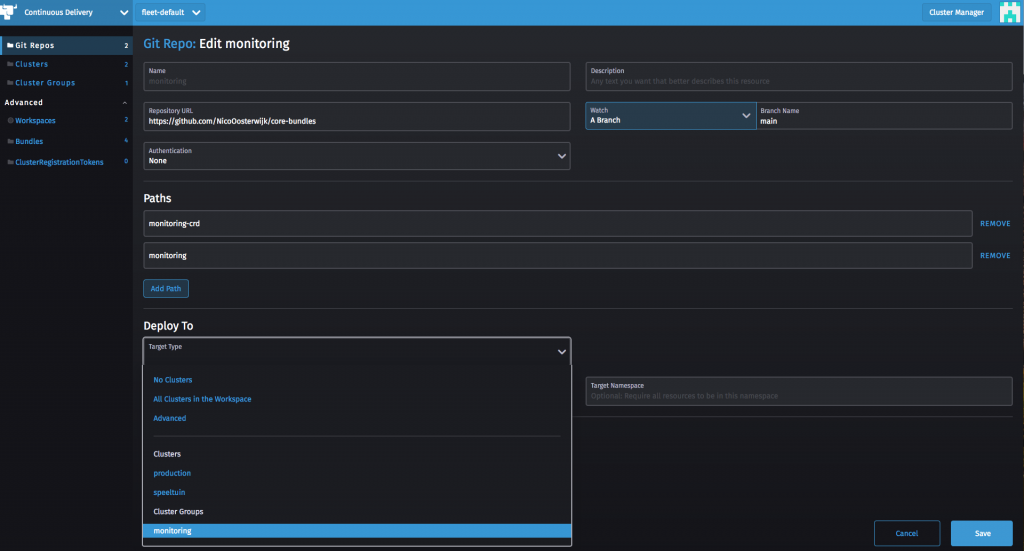

The only thing still needed is the monitoring ‘Git Repo’, so we will create that from a GitHub repository.

As you can see, there are two ‘Paths‘ defined, one for the CRD and one for the deployment. The ‘Deploy To’ option now points to the cluster group called ‘monitoring’.

The monitoring Git Repo uses fleet.yaml files with Helm as the deployment method, as you can see in the GitHub repository at https://github.com/NicoOosterwijk/core-bundles/tree/main/monitoring-crd and https://github.com/NicoOosterwijk/core-bundles/tree/main/monitoring
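Put together, the monitoring setup could look roughly like the sketch below. The GitRepo part is derived from the URLs above (repository, branch ‘main’, two paths) plus the ‘monitoring’ cluster group; the GitRepo name and workspace are assumptions. The Helm fleet.yaml is a hypothetical minimal example that assumes the rancher-monitoring chart from https://charts.rancher.io and the cattle-monitoring-system namespace; the real files in the repository may differ.

```shell
#!/bin/sh
# monitoring_gitrepo_manifest prints a GitRepo with two paths that targets
# a cluster group instead of a single cluster.
monitoring_gitrepo_manifest() {
  cat <<'EOF'
kind: GitRepo
apiVersion: fleet.cattle.io/v1alpha1
metadata:
  name: monitoring
  namespace: fleet-default
spec:
  repo: https://github.com/NicoOosterwijk/core-bundles
  branch: main
  paths:
  - monitoring-crd
  - monitoring
  targets:
  - clusterGroup: monitoring
EOF
}

# helm_fleet_yaml prints a hypothetical fleet.yaml that deploys a Helm chart.
# Assumption: chart name, chart repository and namespace as described above.
helm_fleet_yaml() {
  cat <<'EOF'
defaultNamespace: cattle-monitoring-system
helm:
  releaseName: rancher-monitoring
  repo: https://charts.rancher.io
  chart: rancher-monitoring
EOF
}

# Usage (kubectl context set to the local Rancher cluster):
#   monitoring_gitrepo_manifest | kubectl apply -f -
```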

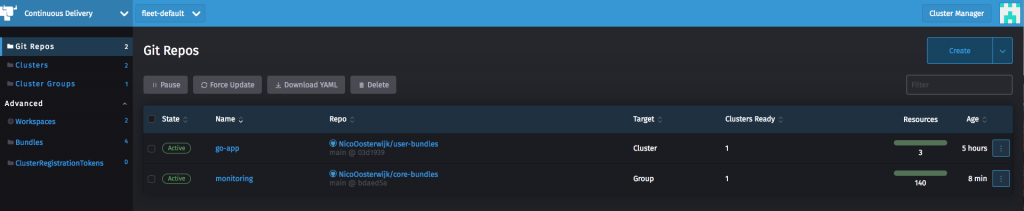

When the deployment is created, there will be two active Git Repos, one for the go-app and one for monitoring:

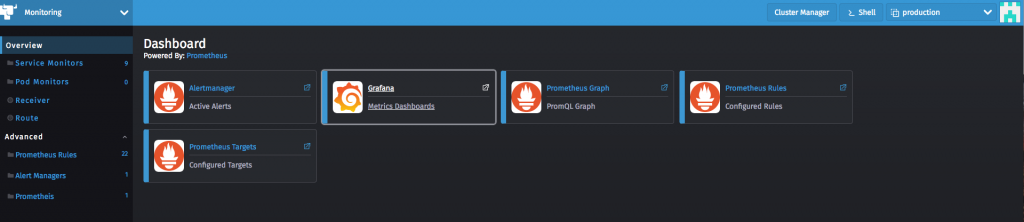

From within Rancher, the monitoring stack can now be used to open Prometheus and Grafana.

Multi-Cluster Kustomize Example

This example will deploy a Go application using kustomize. The app will be deployed into the go-app namespace.

The application will be customized as follows per environment:

- hp-server cluster: Using the AMD64 architecture image.

- raspberry-pi cluster: Using the ARM64 architecture image.

The GitRepo resource looks like this:

    kind: GitRepo
    apiVersion: fleet.cattle.io/v1alpha1
    metadata:
      name: go-app-multi-cluster
      namespace: fleet-default
    spec:
      branch: main
      repo: https://github.com/NicoOosterwijk/fleet.git
      paths:
      - multi-cluster
      targets:
      - name: hp-server
        clusterSelector:
          matchLabels:
            name: hp-server
      - name: raspberry-pi
        clusterName: raspberry-pi

The repo has one branch, called main, which contains a directory multi-cluster with a fleet.yaml file. The targets are selected either by matchLabels or by clusterName.

In the fleet.yaml file, kustomize is used to apply a different overlay per cluster, so that the image is patched to match the processor architecture of each cluster.
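As an illustration of that mechanism, a fleet.yaml with per-target kustomize overlays could be generated like this. It is a sketch under assumptions: the overlay directory names are hypothetical, and the targets simply mirror the ones used in the GitRepo; check the linked repository for the real file.

```shell
#!/bin/sh
# kustomize_fleet_yaml prints a fleet.yaml that picks a kustomize overlay
# per target cluster. The overlay directory names are hypothetical.
kustomize_fleet_yaml() {
  cat <<'EOF'
namespace: go-app
targetCustomizations:
- name: hp-server
  clusterSelector:
    matchLabels:
      name: hp-server
  kustomize:
    dir: overlays/hp-server
- name: raspberry-pi
  clusterName: raspberry-pi
  kustomize:
    dir: overlays/raspberry-pi
EOF
}

# Usage: write the file into the path that the GitRepo points at.
#   kustomize_fleet_yaml > multi-cluster/fleet.yaml
```

Each overlay directory would then hold a kustomization.yaml that references the shared base and a patch with the architecture-specific image.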


The customization changes the image to be used:

    apiVersion: apps/v1
    kind: Deployment
    spec:
      template:
        spec:
          containers:
          - name: go-app
            image: nokkie/go-app:1.1

And the deployment from the base will do the rest. Check this repository for more details: https://git.fullstaq.com/nico-public/rancher/fleet/multi-cluster.git

Conclusion

- Fleet can be used to deploy applications on single (downstream) clusters with Kubernetes manifests or Helm charts.

- Fleet can be used to select a group of clusters to deploy Rancher Apps like monitoring by using the Helm chart provided by Rancher.

- Fleet can be used to deploy applications for a multi-cluster environment using kustomize to patch deployments where needed.

- An auto-force-update (like the AutoSync feature of ArgoCD) is not available at this time. However, a ‘forceSync’ can be patched using kubectl.

My inspiration came from https://fleet.rancher.io/ where there is lots more to read and learn about Fleet. Have fun!